Training an AI to Understand The Stock Market

How I used AI to learn from the previous prices of a stock and then make predictions on them. It does not predict future prices yet.

What is AI?

There has been a lot of talk in the media recently about AI and its effects on society. But what is AI? Artificial intelligence (AI) is essentially the use of computers to do tasks that usually need human intelligence. AI can process large volumes of data in ways that humans cannot. The objective is for it to notice patterns, make decisions, and evaluate information the way humans do.

In this use case an LSTM algorithm is used to learn from the previous trends of the stock market. These trends are compiled into a model, which is then used to make a prediction. Currently I am having issues with predicting future markets.

What does LSTM mean?

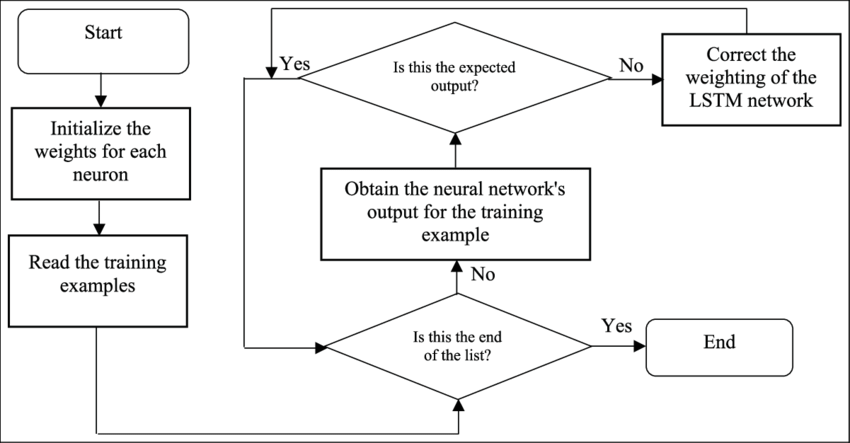

This is great, but what actually is an LSTM algorithm? Long short-term memory networks, or LSTMs, are used in deep learning. They are a type of recurrent neural network (RNN) that is able to learn long-term dependencies, particularly in tasks involving sequence prediction. Because an LSTM contains feedback connections, it can process a complete sequence of data and not just single data points like photos. This has uses in machine translation and speech recognition, among others. LSTMs perform well on a wide range of problems.

Due to their ability to understand long-term connections between time steps in the data, LSTMs are frequently used to learn, analyse, and categorise sequential data. Sentiment analysis, language modelling, speech recognition, and video analysis are examples of common LSTM applications.
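To make the idea of "memory" a bit more concrete, here is a minimal NumPy sketch of a single LSTM time step. It is not the Keras implementation used later in this post. The function names, the random weights, and the order in which the gates are stacked are all assumptions made for illustration.

```python
import numpy as np

def sigmoid(x):
    """Logistic function, squashes values into the range (0, 1)."""
    return 1.0 / (1.0 + np.exp(-x))

def lstm_step(x_t, h_prev, c_prev, W, U, b):
    """Run one LSTM time step and return the new hidden and cell state.

    W has shape (4*hidden, inputs), U has shape (4*hidden, hidden), b has shape (4*hidden,).
    The rows are stacked as: input gate, forget gate, candidate, output gate
    (an ordering chosen for this sketch).
    """
    hidden = h_prev.shape[0]
    z = W @ x_t + U @ h_prev + b
    i = sigmoid(z[0:hidden])                  # input gate: how much new information to store
    f = sigmoid(z[hidden:2 * hidden])         # forget gate: how much old memory to keep
    g = np.tanh(z[2 * hidden:3 * hidden])     # candidate values to add to the memory
    o = sigmoid(z[3 * hidden:4 * hidden])     # output gate: how much memory to expose
    c_t = f * c_prev + i * g                  # updated cell state (the long-term memory)
    h_t = o * np.tanh(c_t)                    # updated hidden state (the output)
    return h_t, c_t

# Hypothetical sizes and random weights, purely for demonstration.
rng = np.random.default_rng(0)
hidden, inputs = 3, 1
W = rng.normal(size=(4 * hidden, inputs))
U = rng.normal(size=(4 * hidden, hidden))
b = np.zeros(4 * hidden)

# Feed a short made-up sequence of scaled prices through the cell, one step at a time.
h = np.zeros(hidden)
c = np.zeros(hidden)
for price in [0.10, 0.12, 0.11, 0.15]:
    h, c = lstm_step(np.array([price]), h, c, W, U, b)

print(h.shape, c.shape)
```

The cell state `c` is carried from one step to the next, which is how information from early in the sequence can still influence the output many steps later.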

Breakdown

Here is the full script we use to train on previous prices and then predict them.

import yfinance as yf

import pandas as pd

import numpy as np

import matplotlib.pyplot as plt

from sklearn.preprocessing import MinMaxScaler

from tensorflow.keras.models import Sequential

from tensorflow.keras.layers import Dense, LSTM

import tensorflow as tf

# Set the stock ticker symbol

stock = "AAPL"

# Get the historical data for the stock

data = yf.download(stock, period="max")

# Create a new dataframe with only the 'Close' column

close_data = data.filter(['Close'])

# Convert the dataframe to a numpy array

close_dataset = close_data.values

# Get the number of rows to train the model on

training_data_len = int(np.ceil(len(close_dataset) * 0.8))

# Scale the data

scaler = MinMaxScaler(feature_range=(0, 1))

scaled_data = scaler.fit_transform(close_dataset)

# Create the training data set

train_data = scaled_data[0:training_data_len, :]

# Split the data into x_train and y_train data sets

x_train = []

y_train = []

for i in range(60, len(train_data)):

    x_train.append(train_data[i-60:i, 0])

    y_train.append(train_data[i, 0])

# Convert the x_train and y_train to numpy arrays

x_train, y_train = np.array(x_train), np.array(y_train)

# Reshape the data

x_train = np.reshape(x_train, (x_train.shape[0], x_train.shape[1], 1))

# Build the LSTM model

model = Sequential()

model.add(LSTM(50, return_sequences=True, input_shape=(x_train.shape[1], 1)))

model.add(LSTM(50, return_sequences=False))

model.add(Dense(25))

model.add(Dense(1))

# Compile the model

model.compile(optimizer='adam', loss='mean_squared_error')

# Train the model

model.fit(x_train, y_train, batch_size=1, epochs=1)

# Create the testing data set

test_data = scaled_data[training_data_len - 60: , :]

# Create the x_test and y_test data sets

x_test = []

y_test = close_dataset[training_data_len:, :]

for i in range(60, len(test_data)):

    x_test.append(test_data[i-60:i, 0])

# Convert the data to a numpy array

x_test = np.array(x_test)

# Reshape the data

x_test = np.reshape(x_test, (x_test.shape[0], x_test.shape[1], 1))

# Get the models predicted price values

predictions = model.predict(x_test)

predictions = scaler.inverse_transform(predictions)

# Get the root mean squared error (RMSE)

rmse = np.sqrt(np.mean((predictions - y_test)**2))

print('RMSE:', rmse)

# Plot the data

train = close_data[:training_data_len]

valid = close_data[training_data_len:].copy()

valid['Predictions'] = predictions

plt.figure(figsize=(16,8))

plt.title('LSTM Model')

plt.xlabel('Date', fontsize=18)

plt.ylabel('Close Price USD ($)', fontsize=18)

plt.plot(train['Close'])

plt.plot(valid[['Close', 'Predictions']])

plt.legend(['Train', 'Validation', 'Predictions'], loc='lower right')

plt.show()

model.save('lstm_model.h5')

To break down this code we need to split it into segments. We can classify the segments by the task that they do.

Importing Libraries

import yfinance as yf

import pandas as pd

import numpy as np

import matplotlib.pyplot as plt

from sklearn.preprocessing import MinMaxScaler

from tensorflow.keras.models import Sequential

from tensorflow.keras.layers import Dense, LSTM

import tensorflow as tf

As the title suggests, this code imports the libraries needed for the script to function. yfinance is the library for accessing Yahoo Finance data; this is how we get our stock prices. pandas is the library for data manipulation and analysis; the downloaded prices arrive as a pandas DataFrame. NumPy is the library for numerical computing; we use it for arrays, among other things. matplotlib.pyplot is the library for creating visualizations. MinMaxScaler is a class from scikit-learn for scaling data, used here for preprocessing. Sequential is the Keras class for building a neural network as a stack of layers. Dense and LSTM are the Keras layer classes that define the architecture of the network. Finally, TensorFlow is the library for building machine learning models, and Keras ships as part of it.

Get Stock Statistics

# Set the stock ticker symbol

stock = "AAPL"

# Get the historical data for the stock

data = yf.download(stock, period="max")

# Create a new dataframe with only the 'Close' column

close_data = data.filter(['Close'])

This segment of code collects and formats the stock prices of a specific company. In this case AAPL refers to Apple Inc.'s stock. It downloads the stock prices for the maximum period they have been available. It then filters out all of the other columns and keeps only the Close price.

Create A Dataframe

# Convert the dataframe to a numpy array

close_dataset = close_data.values

# Get the number of rows to train the model on

training_data_len = int(np.ceil(len(close_dataset) * 0.8))

As you can see, this converts our Close data into a NumPy array that will be used to train our model. The training_data_len variable is the number of rows that make up 80% of the dataset, rounded up. Because the rows are in date order, the first (oldest) 80% of the dataset is used to train the model and the most recent 20% is held back for testing.
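Here is a small sketch of how that split works, using ten made-up prices in place of the real Close data. Only the `training_data_len` calculation is taken from the script; the other names are assumptions for illustration.

```python
import numpy as np

# Ten made-up closing prices, oldest first, shaped like close_data.values (rows, 1).
prices = np.arange(1.0, 11.0).reshape(-1, 1)

# Same calculation as in the script: 80% of the rows, rounded up.
training_data_len = int(np.ceil(len(prices) * 0.8))

train_part = prices[:training_data_len]   # the oldest 80% of the rows
test_part = prices[training_data_len:]    # the most recent 20% of the rows

print(training_data_len)    # 8
print(train_part.ravel())
print(test_part.ravel())
```

Slicing from the start of the array keeps the oldest rows for training, so the model is always tested on prices that come after the ones it learned from.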

Building the model

# Reshape the data

x_train = np.reshape(x_train, (x_train.shape[0], x_train.shape[1], 1))

# Build the LSTM model

model = Sequential()

model.add(LSTM(50, return_sequences=True, input_shape=(x_train.shape[1], 1)))

model.add(LSTM(50, return_sequences=False))

model.add(Dense(25))

model.add(Dense(1))

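As you can see, the first line of code reshapes the data into the 3D “shape” that an LSTM layer expects: (samples, time steps, features). Here that is the number of 60-day windows, 60 days per window, and 1 feature (the Close price). After this we have a number of lines that create the model and add its layers. The first LSTM layer has 50 units, takes the input shape fixed in the first line, and returns the full sequence so that the second LSTM layer can process it. The second LSTM layer returns only its final output. The two Dense layers are fully connected layers: the first has 25 units and the last has 1 unit, which is the predicted price.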
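The shape an LSTM layer works with is (samples, time steps, features). Below is a minimal sketch of how sliding windows end up in that shape. It uses a 3-step window on made-up data where the script uses a 60-day window, and `make_windows` is a hypothetical helper, not part of the script.

```python
import numpy as np

def make_windows(series, window):
    """Return (x, y): x[k] holds `window` consecutive values, y[k] is the value right after them."""
    x, y = [], []
    for i in range(window, len(series)):
        x.append(series[i - window:i])   # the previous `window` values
        y.append(series[i])              # the value the model should predict
    return np.array(x), np.array(y)

series = np.array([0.1, 0.2, 0.3, 0.4, 0.5, 0.6])   # made-up scaled prices
x, y = make_windows(series, window=3)

# Add a trailing axis of size 1: one feature (the price) per time step.
x = x.reshape(x.shape[0], x.shape[1], 1)

print(x.shape)   # (3, 3, 1)
print(y)         # [0.4 0.5 0.6]
```

Six values with a window of 3 give three samples, because the first three values have no full window before them.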

Compiling and Training The Model

# Compile the model

model.compile(optimizer='adam', loss='mean_squared_error')

# Train the model

model.fit(x_train, y_train, batch_size=1, epochs=1)

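The first line compiles the model with a selected optimizer and loss function. The optimizer, here Adam, decides how the model's weights are updated during training. The loss, here mean squared error, measures how far the predictions are from the real values; it is not a percentage, and the higher the loss, the more inaccurate the model. The model is then fit, or trained, with the stock data. The batch_size is how many samples the model processes before each weight update. The epochs value is how many times training loops over the whole training set. With epochs=1 the model sees each training window only once.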
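In Keras, `batch_size` is the number of samples processed per weight update and `epochs` is the number of full passes over the training set. The sketch below shows the arithmetic that follows from that. The sample count of 8000 and the helper name are made up for illustration.

```python
import math

def updates_per_epoch(num_samples, batch_size):
    """Number of weight updates in one pass over the data (the last batch may be smaller)."""
    return math.ceil(num_samples / batch_size)

num_samples = 8000   # hypothetical number of training windows
epochs = 1           # same value as in the script

for batch_size in (1, 32, 64):
    total_updates = updates_per_epoch(num_samples, batch_size) * epochs
    print(batch_size, total_updates)
```

With `batch_size=1` the weights are updated after every single window, which is why training in this script is slow. A larger batch size means fewer, less noisy updates per epoch.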

Predicting Past Prices

# Create the testing data set

test_data = scaled_data[training_data_len - 60: , :]

# Create the x_test and y_test data sets

x_test = []

y_test = close_dataset[training_data_len:, :]

for i in range(60, len(test_data)):

    x_test.append(test_data[i-60:i, 0])

# Convert the data to a numpy array

x_test = np.array(x_test)

# Reshape the data

x_test = np.reshape(x_test, (x_test.shape[0], x_test.shape[1], 1))

# Get the models predicted price values

predictions = model.predict(x_test)

predictions = scaler.inverse_transform(predictions)

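The first line creates the test data set from the scaled data. It starts 60 rows before the end of the training data so that the first test day has a full 60 days of history. We then create the X and Y data sets: x_test holds the 60-day windows the model predicts from, and y_test holds the actual, unscaled Close prices, which are used to measure the error and to plot later on. We convert the list to a NumPy array and once again reshape it into the shape the model expects. We then use our newly trained model to make a prediction for every day in the test period, and inverse_transform converts those predictions from the 0 to 1 scale back into dollar prices.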
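To show why `inverse_transform` is needed, here is a NumPy-only sketch of min-max scaling and its inverse on made-up prices. For a single column and a (0, 1) range, this is the same formula MinMaxScaler applies, but the variable names here are assumptions and the script itself uses scikit-learn.

```python
import numpy as np

prices = np.array([[10.0], [12.0], [15.0], [20.0]])   # made-up closing prices

# Scale to the range 0..1: (x - min) / (max - min)
low, high = prices.min(), prices.max()
scaled = (prices - low) / (high - low)

# Undo the scaling: x_scaled * (max - min) + min
restored = scaled * (high - low) + low

print(scaled.ravel())
print(restored.ravel())
```

The model only ever sees values between 0 and 1, so its raw predictions are on that scale too. Reversing the formula turns them back into prices that can be compared with `y_test`.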

Plot and save

# Get the root mean squared error (RMSE)

rmse = np.sqrt(np.mean((predictions - y_test)**2))

print('RMSE:', rmse)

# Plot the data

train = close_data[:training_data_len]

valid = close_data[training_data_len:].copy()

valid['Predictions'] = predictions

plt.figure(figsize=(16,8))

plt.title('LSTM Model')

plt.xlabel('Date', fontsize=18)

plt.ylabel('Close Price USD ($)', fontsize=18)

plt.plot(train['Close'])

plt.plot(valid[['Close', 'Predictions']])

plt.legend(['Train', 'Validation', 'Predictions'], loc='lower right')

plt.show()

model.save('lstm_model.h5')

This code first calculates the root mean squared error (RMSE) between the predictions and the actual prices. It then plots the data onto a chart using matplotlib: the Close prices the model was trained on, the actual Close prices for the test period, and the model's predictions for that period. It then shows the chart and saves the model so it can be used later in another script. That script will be used to predict the next 60 or so days and will be explained in a later blog post.
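RMSE squares each error before averaging, so errors in opposite directions cannot cancel each other out. Here is a minimal sketch with made-up numbers; the `rmse` helper is hypothetical and not part of the script.

```python
import numpy as np

def rmse(predicted, actual):
    """Root mean squared error: square each error, average them, then take the square root."""
    errors = np.asarray(predicted, dtype=float) - np.asarray(actual, dtype=float)
    return float(np.sqrt(np.mean(errors ** 2)))

actual = [100.0, 102.0, 104.0]      # made-up real prices
predicted = [101.0, 101.0, 104.0]   # made-up predictions, errors are +1, -1 and 0

mean_error = float(np.mean(np.array(predicted) - np.array(actual)))

print(mean_error)                # 0.0, the +1 and -1 cancel out
print(rmse(predicted, actual))   # greater than 0, both misses are counted
```

A plain average of the errors would report a perfect score here even though two of the three predictions are wrong, which is why the squaring has to happen before the mean is taken.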